The Manufacturing of Time¶

In philosophy, the analysis of time by Kant distinguishes between two temporal entities, instant and duration, that are linked by three temporal modes or relationships: succession, simultaneity and permanence.

This analysis can be used to classify programming languages and computer music systems by their handling of instant and duration:

- Dealing with succession and simultaneity of instants leads to the event-triggered or event-driven view, where a processing activity is initiated as a consequence of the occurrence of a significant event. For instance, this is the underlying model of time in MIDI.

- Managing duration and permanence points to a time-triggered or time-driven view, where activities are initiated periodically at predetermined points in real time and last for some duration. This is the usual approach in audio computation.

These two points of view[^1] are supported in Antescofo, and the composer/programmer can express their own musical processes in the most appropriate style. We will elaborate on this point, with some comparisons with ChucK to make the ideas concrete. Indeed, ChucK exhibits a complete and coherent model of time, relevant to both audio processing and the handling of asynchronous events like MIDI and OSC messages or interactions with serial and human interface devices. In the next section, the fabric of time, we will discuss the unique capacity of Antescofo to specify and manage several time references.

Instants and Succession: Sequential Languages¶

Sequential programming languages usually deal only with instants (the locations in time of elementary computations) and their succession. The actual duration of a computation does not matter, nor does the interval of time between two instants: these instants are events.

This model is that of MIDI: basic events are note on and note off messages. There is no notion of duration in MIDI: the duration of a note is represented by the interval of time between a note on and the corresponding note off, and it has to be managed externally to the MIDI device, e.g. by a sequencer. In addition, two MIDI events cannot happen simultaneously. So we cannot say, for instance, that a chord starts at some point in time, because the onsets of the notes of the chord are distinct sequential events.

Instants, Succession and Simultaneity: Synchronous Languages¶

In a purely sequential programming language, it is very difficult to do something at a given date. We can imagine a mechanism that suspends the execution for a given duration and wakes up at the given date, as in

    sleep(12 p.m. - now());
    computation to do at 12 p.m.

or a mechanism that suspends the execution until the arrival of a date or the occurrence of an event:

    wait(12 p.m.);
    computation to do at 12 p.m.

    wait(MIDI message);
    process received message

Notice that the computation resumes after the date or the event. On a practical level, the resulting delay is usually negligible (e.g. chords can usually be emulated in MIDI using successive events). However, at a conceptual level, it means that simultaneity cannot be directly expressed in the language, which makes the specification of some temporal behaviors more difficult.

To express simultaneity in the previous code fragment, we have to imagine that computations happen infinitely fast, allowing events to be considered atomic and truly synchronous. This is the synchrony hypothesis whose consequences have been investigated in the development of synchronous languages dedicated to the development of real-time embedded systems like Esterel, Lustre or Lucid Synchrone.

Synchronous Languages¶

Synchronous languages have not only postulated infinitely fast computations, allowing two computations to occur simultaneously; they have also postulated that two computations occurring at the same instant are nevertheless ordered. This marks a strong difference between simultaneity and parallelism (more on this below) and articulates, in an odd way, succession and simultaneity[^2].

However, a formal model such as superdense time shows that there is no logical flaw in the idea that actions occurring at the same date are performed in a specific order (see the section on superdense time below). Better still, this hypothesis reconciles determinism with the modularity and expressiveness of concurrency: at a certain abstraction level, we may assume that an action takes no time to be performed (i.e. its execution time is negligible at this abstraction level) and we may assume a sequential execution model (the sequence of actions is performed in a specific and well-determined order), which implies deterministic and predictable behavior. Such determinism can lead to programs that are significantly easier to specify, debug, and analyze than nondeterministic ones.

A good example of the relevance of the synchrony hypothesis in the design of real-time systems is the sending of messages, in Max or PureData, to control some device. To change the frequency of a sine-wave generator, the generator must already have been turned on. But there is no point in postulating an actual delay between turning on the generator and changing its default frequency. The two corresponding messages are sent in the same logical instant but in a specific order.

Another example is audio processing when computations are described by a global dataflow graph. From the audio device perspective, time is discretized in instants corresponding to the input and the output of an audio buffer. In one of these instants, all computations described by the global graph happen together. However, in this instant, computations are ordered, e.g. by traversing the audio graph in depth-first order, starting from one of several well-known sinks, such as dac. Each audio processing node connected to the dac is asked to compute and return the next buffer, recursively requesting the output of upstream nodes.

In section Thickness of an Instant we investigate the notion of an action's priority used to totally order the actions that occur within the same instant, even if they are not structurally related in the program.

Superdense Time¶

For the curious reader, we give here a brief account of superdense time[^3], a simple formal model of time supporting the synchrony hypothesis. This approach is used by the Antescofo language to implement a total order on the execution of actions.

Given a model of time $T$ that defines a set of ordered instants, a superdense time $SD_{[T]}$ is built on top of $T$ to enable simultaneous but totally ordered activities at the same instant. An instant in $SD_{[T]}$ is a pair $(t, n)$ where $t$ is an instant of $T$ and $n$ is a microstep (an infinitesimally small unit of time occurring within a logical instant):

- $t$ represents the date at which some event occurs,

- and $n$ represents the sequencing of events that occur simultaneously.

So, two dates $(t_1, n_1)$ and $(t_2, n_2)$ are interpreted as (weakly) simultaneous if $t_1 = t_2$, and strongly simultaneous if, in addition, $n_1 = n_2$.

Thus, an event at $(t_1, n_1)$ is considered to occur before another at $(t_2, n_2)$ if either $t_1 < t_2$, or $t_1 = t_2$ and $n_1 < n_2$. In other words, $SD_{[T]}$ is ordered lexicographically.
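The lexicographic order on pairs (t, n) can be illustrated with a small sketch. This is not how the Antescofo run-time is implemented; it is a Python illustration that relies on the fact that Python tuples compare lexicographically. The event labels and the helper names `run_in_order`, `weakly_simultaneous` and `strongly_simultaneous` are hypothetical.

```python
import heapq

# Each entry is ((t, n), label): t is the date, n the microstep within that date.
# The labels are illustrative only.
events = [
    ((1.0, 1), "a2"),
    ((0.0, 0), "a0"),
    ((1.0, 0), "a1"),
    ((0.5, 0), "b0"),
]

def run_in_order(pending):
    """Return the labels in superdense-time order (lexicographic on (t, n))."""
    heap = list(pending)
    heapq.heapify(heap)
    order = []
    while heap:
        (_t, _n), label = heapq.heappop(heap)
        order.append(label)
    return order

def weakly_simultaneous(x, y):
    """Same date t, whatever the microsteps."""
    return x[0] == y[0]

def strongly_simultaneous(x, y):
    """Same date t and same microstep n."""
    return x == y

order = run_in_order(events)
print(order)  # ['a0', 'b0', 'a1', 'a2']
```

Here a1 and a2 share the date 1.0, so they are weakly simultaneous, yet the microstep still fixes which one is performed first.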

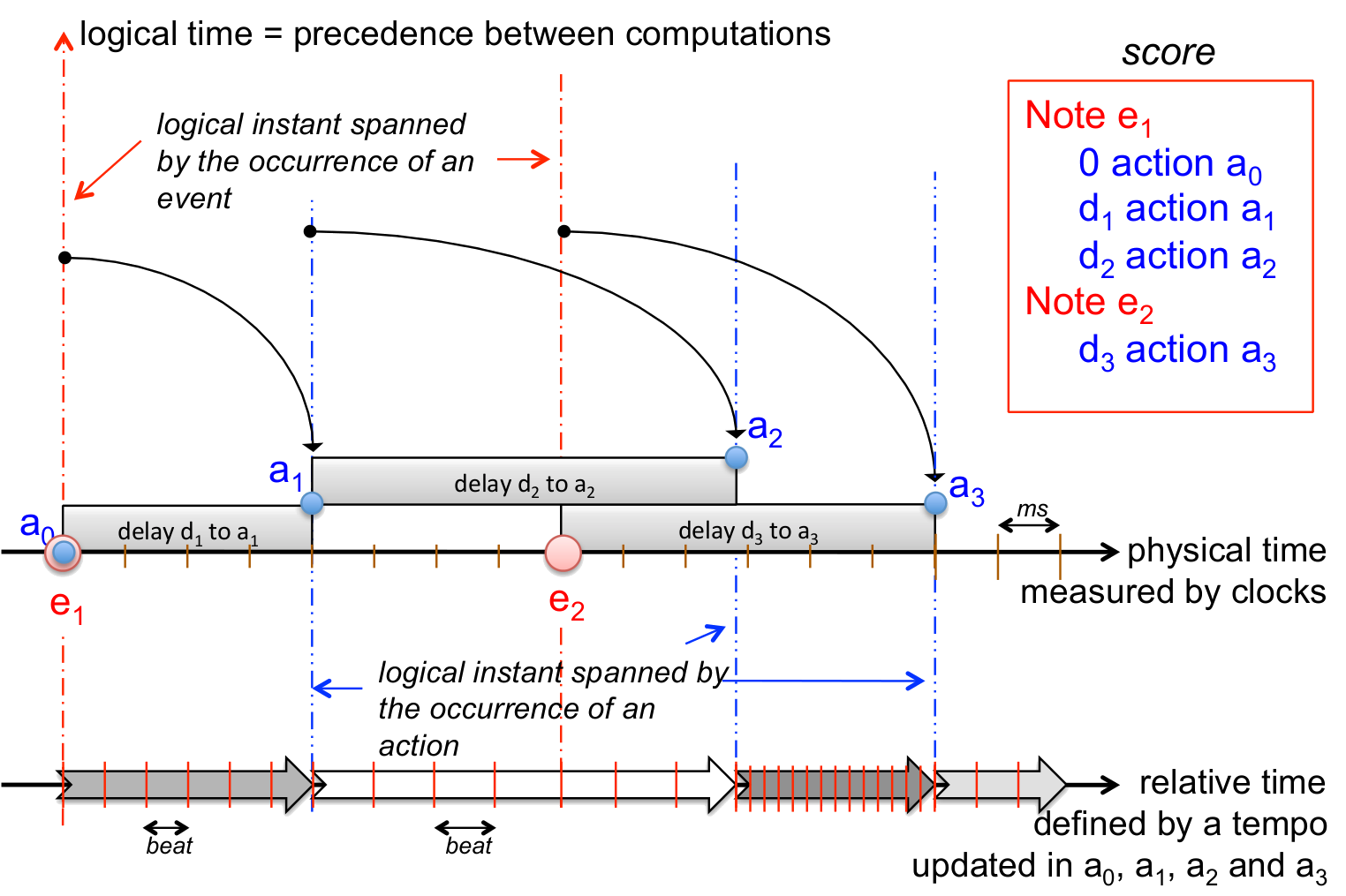

How does this relate to Antescofo? In the figure, the sequence of synchronous actions appears on the vertical axis, so this axis corresponds to the dependency between simultaneous computations. Notice that the (vertical) height of a box represents logical dependencies while the (horizontal) length of a box represents a duration in time. Note, for example, that even if the durations of $a_1$ and $a_2$ are both zero, the execution order of actions $a_0$, $a_1$ and $a_2$ is the same as their order of appearance in the score.

A delay, a period in a loop or a sample in a curve corresponds to a progression on the horizontal axis. When these quantities are expressed in relative time, they depend on a tempo which can be dynamic (i.e., it can change with the passing of time). A dynamic tempo corresponds to shrinking or dilating the horizontal axis, but the order of events on the timeline is preserved.

Causality between computations (e.g. the evaluation of a sum must be done after the evaluation of its arguments) corresponds to succession on the vertical axis. Causality is not enough to give a complete ordering of simultaneous actions. For example, between two simultaneous assignments:

    let $x := $x + $y
    let $y := $x + $y + 1

the final result depends on the order in which the interpreter performs the assignments. Action priority is used to decide which one must be performed first. In Antescofo, the assignment that occurs first in the score is performed first (as in mainstream sequential programming languages).
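To see concretely why the order matters, the two assignments can be transcribed into Python and run in both orders. This is only an illustration; the function names and the initial values 1 and 2 are arbitrary assumptions.

```python
def first_then_second(x, y):
    """Score order: the assignment to x is performed first."""
    x = x + y          # let $x := $x + $y
    y = x + y + 1      # let $y := $x + $y + 1
    return x, y

def second_then_first(x, y):
    """Reverse order: the assignment to y is performed first."""
    y = x + y + 1      # let $y := $x + $y + 1
    x = x + y          # let $x := $x + $y
    return x, y

print(first_then_second(1, 2))   # (3, 6)
print(second_then_first(1, 2))   # (5, 4)
```

Both orders respect causality within each assignment, so only a priority rule such as score order can select one of the two results.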

Duration: Audio Processing Languages¶

In a time-triggered system, activities are performed periodically, that is, at time points predefined by a given duration. This duration matches the dynamics of these activities. In these systems, the computations are driven by the passing of time, not by the occurrence of logical events as in event-triggered systems.

Audio computations are very often structured as time-triggered systems. The audio signal is sampled periodically. For efficiency, block processing[^4] is implemented by grouping samples into an audio buffer of fixed size, which corresponds to a definite duration of the audio signal. Audio buffers are themselves processed periodically at an audio rate.
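The duration covered by one audio buffer follows directly from the buffer size and the sampling rate. The following Python sketch works through the arithmetic; `buffer_period_ms` is a hypothetical helper name, and the buffer sizes are only examples.

```python
def buffer_period_ms(buffer_size, sample_rate_hz):
    """Duration in milliseconds of one buffer of `buffer_size` samples."""
    return 1000.0 * buffer_size / sample_rate_hz

print(round(buffer_period_ms(256, 44100), 2))  # 5.8
print(round(buffer_period_ms(64, 44100), 2))   # 1.45
```

At 44100 Hz, a buffer of 256 samples is therefore processed about every 5.8 ms, and a buffer of 64 samples about every 1.45 ms.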

Duration also appears when a language offers the possibility to be woken up after some delay. In Antescofo this is done by writing a delay before an action; in ChucK, by chucking the delay to now:

    // in ChucK
    do something
    5.8::ms => now;  // advance in time
    do nextthing

    // in Antescofo
    do something
    5.8 ms  // wait 5.8 milliseconds
    do nextthing

So, to trigger an activity periodically, such as filling a buffer of 256 samples at 44100 Hz (about 5.8 ms), one can write:

    // in ChucK
    while (true)
    {
        do something
        5.8::ms => now;
    }

    // in Antescofo
    Loop 5.8 ms
    {
        do something
    }

These two code snippets in ChucK and in Antescofo seem very similar but their interpretations differ greatly. The ChucK program is a sequential program that is suspended for a given duration: any code between instructions that advance time can be considered atomic (i.e. presumed to happen instantaneously at a single point in time). The Antescofo code describes a parallel program where some actions have to be iterated once per period (iterations are supposed to take place independently, so they may overlap). These actions can be atomic or have their own duration.

This example exhibits one of the differences between the approaches of ChucK and Antescofo: ChucK takes a view where the computations happen infinitely fast and sequentially, while Antescofo's approach is to view computation as infinitely fast and in parallel.

This formulation seems absurd until one realizes that, here, parallelism refers to a logical notion related to the structure of the program evaluation and is not related to the time at which computations occur. A program is parallel if the progression of the computation is described by several threads (each thread corresponds to a succession of actions). In the case of ChucK, all control structures are sequential, except the explicit thread creation operation spork. On the other hand, in Antescofo threads are implicitly derived from the nested structure of compound actions: every action spawns new threads for its child actions (except with the ==> and +=> continuation operators). See the table below:

| | Implicit threads | One thread | Explicit threads |
|---|---|---|---|
| instruction counter | 0 | 1 | n |
| examples | PureData, Antescofo | C, Java, Python | Occam, C+threads, ChucK |
| thread creation | implicit through data and control dependencies | n/a | through explicit operator par, spork, fork, ... |

For example, the program

    Group G
    {
        d₁ a₁
        d₂ a₂
        d₃ a₃
    }

launches the actions aᵢ in parallel:

$$(d_1\ a_1) \parallel ((d_1 + d_2)\ a_2) \parallel ((d_1 + d_2 + d_3)\ a_3)$$
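The start date of each action relative to the start of the group is the running sum of the delays that precede it. As a minimal Python sketch (the helper `absolute_start_times` and the delay values are assumptions made for illustration, not part of Antescofo):

```python
from itertools import accumulate

def absolute_start_times(delays):
    """Map relative delays d1, d2, d3, ... to d1, d1+d2, d1+d2+d3, ..."""
    return list(accumulate(delays))

delays = [1.0, 0.5, 2.0]             # d1, d2, d3 (arbitrary example values)
print(absolute_start_times(delays))  # [1.0, 1.5, 3.5]

# With all delays equal to zero, every action starts at the same date.
print(absolute_start_times([0, 0, 0]))  # [0, 0, 0]
```

Each action can then be scheduled independently at its own start date, which is what the parallel composition expresses.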

It is because of the cumulative delays that the group G seems to be a sequential construct. But without delays, it is apparent that the actions aᵢ are spawned in parallel. A ChucK program that really mimics the Antescofo Loop has to explicitly use shreds (i.e. ChucK threads) to make the while bodies independent[^5]:

    // in ChucK
    fun void loop_body()
    {
        do something
    }

    while (true)
    {
        spork ~ loop_body();
        5.8::ms => now;
    }

    // in Antescofo
    Loop 5.8 ms
    {
        do something
    }

Notice a benefit of the synchrony hypothesis: synchronous programs are sequential, even in the presence of implicit or explicit threads. So there is no need for locks, semaphores, or other synchronization mechanisms. Actions that occur simultaneously execute sequentially and without preemption, behaving naturally as atomic transactions with respect to variable updates.

Supporting Event and Duration¶

Real-life problems dictate the handling of both events and duration: music usually involves, in addition to audio processing, the handling of events that are asynchronous relative to DSP computations like MIDI and OSC messages or interactions with human interface devices.

Subsuming the event-driven and the time-driven architectures is usually achieved by embedding the event-driven view in the time-driven approach. The handling of events is delayed and taken into account periodically, leading to several internally maintained rates, e.g., an audio rate for audio, a control rate for messages, a refresh rate for the user interface, etc. This is the case for systems like Max or PureData where a distinct control rate is defined. Notice that this control period is typically about 1 ms, which can be finer than a typical audio period (a buffer of 256 samples at a sampling rate of 44100 Hz corresponds to about 5.8 ms), but the control computation can sometimes be interrupted to avoid delaying audio processing (e.g. in Max). In Faust, events are managed at buffer boundaries, i.e. at the audio rate.

The alternative is to subsume the two views by embedding the time-driven computation in an event-driven architecture. After all, periodic activity can be driven by the events of a periodic clock. Thus, the difference between waiting for the expiration of a duration and waiting for the occurrence of a logical event is that, in the former case, the time of arrival can be precomputed.
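The idea of driving periodic activity from an event queue can be sketched as follows. This is a toy Python simulation, not the scheduler of ChucK or Antescofo: the function `simulate`, the labels and the dates (in integer milliseconds) are all hypothetical, and the asynchronous events are placed in the queue in advance only to keep the simulation self-contained.

```python
import heapq
from itertools import count

def simulate(period_ms, horizon_ms, async_events):
    """Event loop in which a periodic clock is just another source of events.

    async_events is a list of (date_ms, label) pairs standing for events
    whose arrival dates are not known in advance in a real system.
    """
    seq = count()  # tie-breaker: entries with equal dates keep insertion order
    queue = [(date, next(seq), label) for date, label in async_events]
    heapq.heapify(queue)
    heapq.heappush(queue, (0, next(seq), "tick"))
    log = []
    while queue:
        date, _, label = heapq.heappop(queue)
        if date > horizon_ms:
            break
        log.append((date, label))
        if label == "tick":
            # The date of the next tick can be precomputed,
            # unlike that of an asynchronous event.
            heapq.heappush(queue, (date + period_ms, next(seq), "tick"))
    return log

log = simulate(period_ms=10, horizon_ms=30,
               async_events=[(4, "midi"), (20, "osc")])
print(log)
# [(0, 'tick'), (4, 'midi'), (10, 'tick'), (20, 'osc'), (20, 'tick'), (30, 'tick')]
```

The periodic activity and the asynchronous events go through the same queue; the only difference is that each tick schedules its successor at a date computed in advance.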

This approach has been investigated by ChucK, where audio is handled at the audio sample level. Computing the next sample is an event interleaved with the other events. This results in tightly interleaved control over audio computation, allowing the programmer to handle processing and higher levels of musical and interactive control at the same time[^6].

This approach is also the Antescofo approach[^7], where instants/events can be specified:

- by the performance of a musical event,

- by the reception of a message (OSC or Max/PD),

- by a duration starting from the occurrence of another event (the duration can be in absolute time or in relative time),

- by the start of an action,

- by the end of an action,

- by the satisfaction of a logical condition when a variable is assigned.

Duration appears in the delay preceding an action, in the period of a loop and in the sampling of a curve. Antescofo delays may be expressed in physical time (seconds, milliseconds) or in relative time (beat).

This feature is unique to Antescofo: in other computer music languages, a duration can be expressed in seconds, in milliseconds or even in samples, but these different units refer to the same physical time. Relative time in Antescofo is not linked to physical time by a simple change of unit: it involves complex and dynamic relationships between the potential timing expressed in the score and the actual timing of the performance. The correspondence between the two is not known a priori but is built incrementally with the passing of time during the performance. This problem is investigated in the next section.

[^1]: Event-Triggered versus Time-Triggered Real-Time Systems. In Proceedings of the International Workshop on Operating Systems of the 90s and Beyond, Vol. 563. Springer, Dagstuhl Castle, Germany, 87–101.

[^2]: Given two actions, one always precedes the other, but some successive actions can be simultaneous. Actions that occur simultaneously occur in the same logical instant. Logical instants are ordered completely by succession, just like actions within an instant.

[^3]: Claudius Ptolemaeus, Editor, System Design, Modeling, and Simulation using Ptolemy II, Ptolemy.org, 2014 (section 1.7.2).

[^4]: The performance benefits of block processing are due to certain compiler optimizations, instruction pipelining, memory compaction, better cache reuse, etc.

[^5]: The dual question is the translation of the ChucK while (true) { ... } construction into Antescofo. From the Antescofo point of view, this construction should be avoided because it would lead to an infinite number of computations in finite time (if the body of the while is an instantaneous action).

    At the end of the day, throwing away all temporal abstractions, a computation takes finite physical time, so an infinite number of instantaneous computations will take an unbounded quantity of physical time, violating the initial assumptions. Such behavior should be avoided.

    This explains why there is no while construct in Antescofo: a while construct makes superdense time progress along the vertical axis, and implementability requires finite height in superdense time. On the other hand, a loop construct makes time progress along the horizontal axis if the period is non-null, and this axis can be unbounded.

    A while (true) { ... } construct (unbounded recursion) cannot be achieved in Antescofo using a loop with a period of zero, Loop 0ms { ... }, because the run-time imposes a finite number of consecutive iterations with a period of 0 if there is no explicit end clause. If this limit is reached, the loop is aborted and an error is signaled. There is no danger from the ForAll construct: although it spawns its body in parallel, the number of spawned groups is bounded by the size of a data structure (mimicking primitive recursion).

    However, there exist some means to specify an unbounded number of actions in Antescofo, and if these actions are all instantaneous (no delay, zero period, zero grain, etc.) this could potentially lead to an infinite number of actions in finite time. Antescofo allows loops with zero period if an end clause is specified, so Loop 0ms { ... } while (true) will hang the execution. It is also possible to define a recursive process that calls itself before performing any other action or delay. It is also possible to specify an expression whose evaluation would lead to infinite computations in finite time (e.g. the call to a recursive function).

[^6]: It can also be argued that ChucK is a purely time-driven architecture, with a control rate equating to the signal sampling rate. Because the corresponding duration d is very small, and used both for the audio rate and the control rate, the distinction we made between the event-driven and the audio-driven architecture is blurred: one can understand d as the precision of locating an event in time.

[^7]: Audio processing in Antescofo is still experimental. Sample accuracy is achieved for control values corresponding to a curve (irrespective of the @grain sample rate of the curve). Beyond that, our current research work is an attempt to consolidate sample accuracy and block computations through a notion of elastic audio buffer.