Antescofo: A First User Guide

Introduction

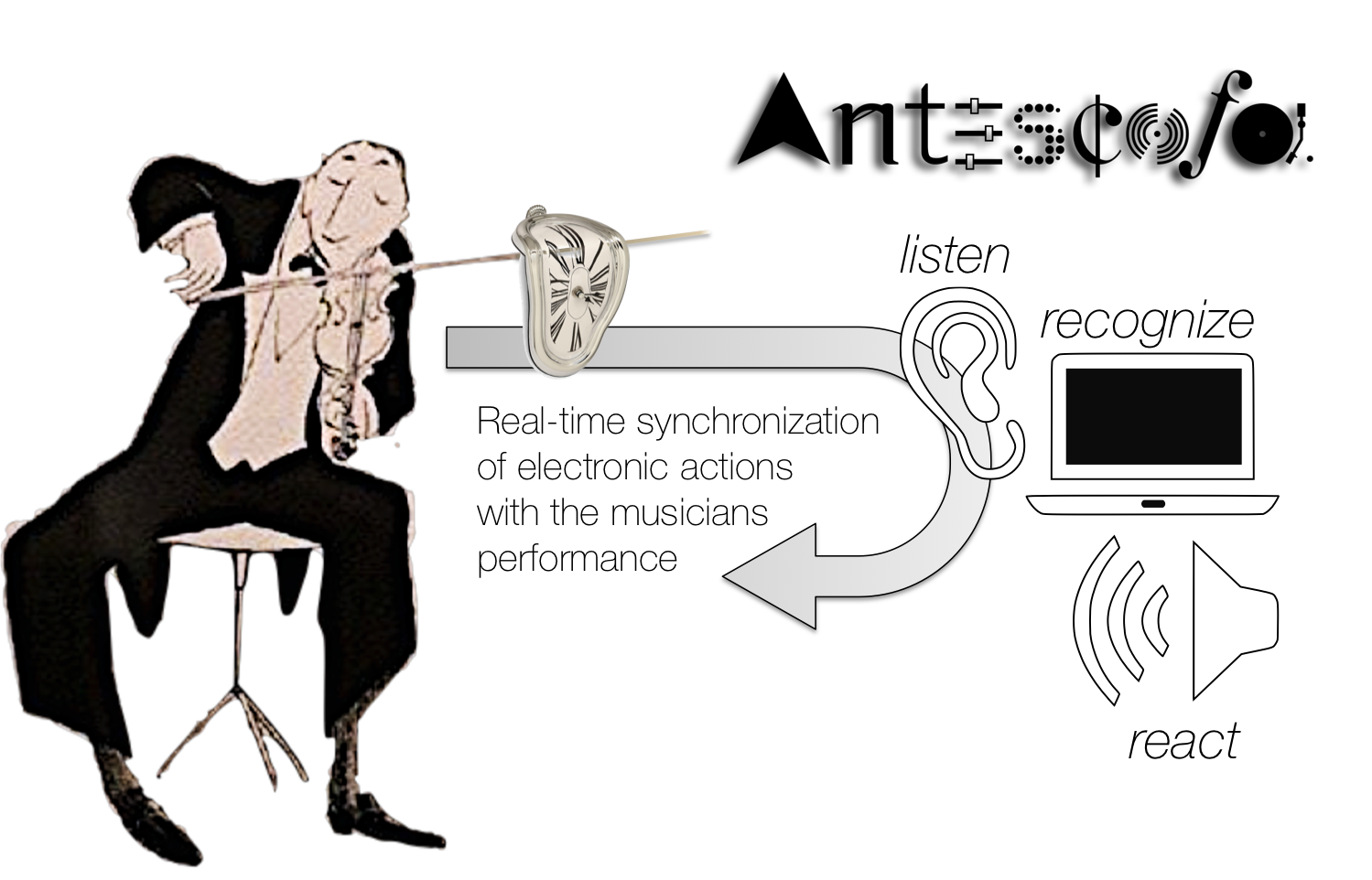

The Antescofo system couples machine listening with a dedicated programming language for compositional and performative purposes. It enables real-time synchronization of human musicians with computers during live performance.

This User Guide gives a bird's-eye view of the Antescofo system:

| Part | Contents |
|---|---|
| Introduction | A brief introduction to Interactive Music Systems and score following (see below); a presentation of the Structure of an Augmented Score in Antescofo, which specifies the musical events to be recognized in the audio stream together with the actions to trigger in time; a short introduction to Events and Actions, the basic elements of an augmented score |
| Overview | An overview of Antescofo Features; a digression on the Antescofo Model of Time, which is at the heart of the unique features Antescofo offers for synchronizing a musical stream with electronic actions |
| Workflow | Authoring the Score; How to Interact with the Environment; Preparing the Performance: tuning the listening machine, then testing and debugging the system during rehearsals up to the final performance |
| Beyond score following | Antescofo is not limited to score following: it has been used as an expressive programmable sequencer handling multiple timelines, in interactive installations, for open and dynamic scores, etc.; Experience Yourself |

Additional information is available elsewhere:

- The Reference Manual offers a more detailed presentation of Antescofo features.
- The Library Functions section lists all predefined functions in the Antescofo library.
- The Antescofo distribution comes with several tutorial patches for Max or PD, as well as the augmented scores of actual pieces.
- The user forum is also a valuable source of information.

Interactive Music Systems

Mixed music (also known as interactive music) is the live association of acoustic instruments played by human musicians with electronic processes running on computers. Mixed music pieces feature real-time processes as diverse as signal processing (sound effects, spatialization), signal synthesis, or message passing to multimedia software.

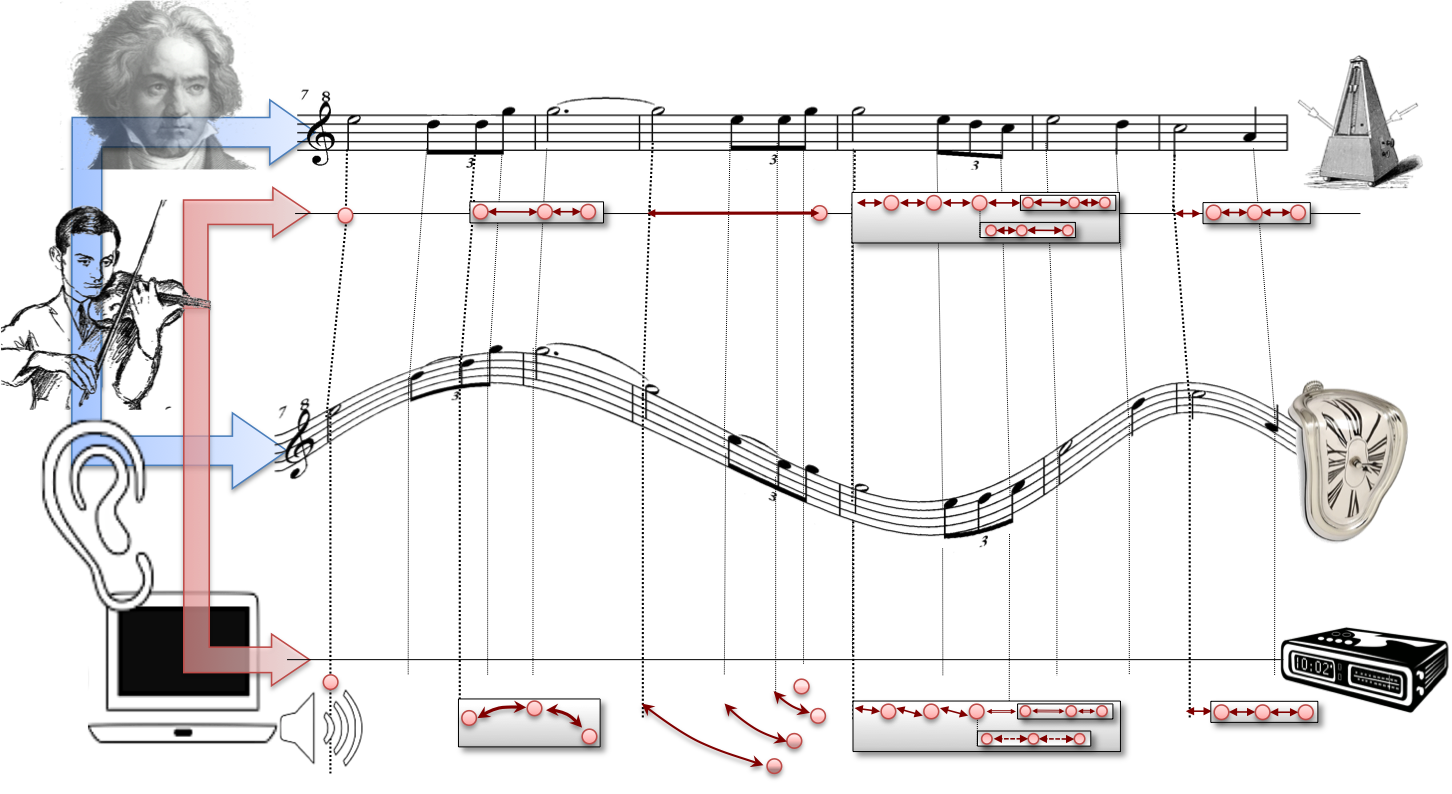

The specification of such processes and the definition of temporal constraints between musicians and electronics are critical issues in mixed music. They can be achieved through a program that connects the music score and the electronic processes. We call such a program an augmented score.

Indeed, a music score is a key tool for composers at authoring time and for musicians at performance time. Composers traditionally organize the musical events played by musicians on a virtual timeline (expressed in beats). These objects share temporal relationships, such as sequential structures (e.g., bars) or polyphony. To encompass all aspects of a mixed music piece, electronic actions have to share the same virtual time frame as the musical events, expressed in beats, and the same organization into hierarchical and sequential structures.

During live performance, musicians interpret the score with precise and personal timing: score time (in beats) is evaluated into physical time (measurable in seconds). For the same score, different interpretations lead to different temporal deviations, and the musicians' actual tempo can vary drastically from the nominal tempo marks. This phenomenon depends on the individual performers and on the interpretative context. To be executed musically, electronic processes should follow the temporal deviations of the human performers.
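To make the distinction between score time and physical time concrete, here is a minimal Python sketch (not part of Antescofo) that evaluates a score position given in beats into a physical date in seconds. It assumes the performer's tempo is piecewise constant; the function names and the `tempo_map` representation are hypothetical and chosen only for illustration. At a constant tempo of T beats per minute, one beat lasts 60/T seconds.

```python
from __future__ import annotations


def beats_to_seconds(beats: float, tempo_bpm: float) -> float:
    """Physical duration of `beats` beats at a constant tempo (beats per minute)."""
    if tempo_bpm <= 0:
        raise ValueError("tempo must be positive")
    return beats * 60.0 / tempo_bpm


def date_in_seconds(position: float, tempo_map: list[tuple[float, float]]) -> float:
    """Physical date of a score position (in beats) under a piecewise-constant tempo.

    `tempo_map` is a hypothetical list of (start_beat, tempo_bpm) pairs sorted by
    start_beat, the first one starting at beat 0. Each tempo holds until the next change.
    """
    seconds = 0.0
    for i, (start, bpm) in enumerate(tempo_map):
        # A segment ends where the next tempo change begins (or never, for the last one).
        end = tempo_map[i + 1][0] if i + 1 < len(tempo_map) else float("inf")
        if position <= start:
            break
        # Accumulate only the part of this segment that lies before `position`.
        seconds += beats_to_seconds(min(position, end) - start, bpm)
    return seconds
```

For example, a four-beat passage played at a nominal 60 BPM lasts 4 seconds (`date_in_seconds(4, [(0, 60)])`), but if the performer speeds up to 120 BPM from beat 2 it lasts 3 seconds (`date_in_seconds(4, [(0, 60), (2, 120)])`). An electronic action written "4 beats later" should land at that shifted date, which is why it cannot simply be scheduled in seconds in advance.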

The Antescofo approach

Achieving this goal starts with score following, a task defined as the real-time automatic alignment of the performance (usually through its audio stream) with the music score. However, score following is only the first step toward musician-computer interaction: it enables such interactions but gives no insight into the nature of the accompaniment or the way it is synchronized.

Antescofo is built on the strong coupling of machine listening and a specific programming language for compositional and performative purposes:

- The listening module of Antescofo infers the variability of the performance through score following and tempo detection algorithms.
- The Antescofo language:
  - provides generic and expressive support for designing complex musical scenarios between human musicians and computer media in real-time interactions;
  - makes explicit the composer's intentions on how computers and musicians are to perform together (for example, should they play in a "call and response" manner, or should the musician take the lead, etc.).

This way, the programmer/composer describes the interactive scenario with an augmented score, where musical objects stand next to computer programs, specifying temporal organizations for their live coordination. During each performance, human musicians “implement” the instrumental part of the score, while the system evaluates the electronic part taking into account the information provided by the listening module.
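As a rough illustration of this division of labor, the following Python sketch models a tiny augmented score in which each musical event carries electronic actions whose delays are written in beats. When the listening module reports that an event has been recognized, together with a tempo estimate, those delays are converted into physical dates. This is a simplified model under stated assumptions, not Antescofo's syntax or implementation: the classes `Action` and `Event`, the function `schedule` and the messages are hypothetical, and the tempo is frozen at detection time, whereas a real system would keep following tempo changes while actions are pending.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Action:
    delay_beats: float  # delay relative to the triggering event, in beats (score time)
    message: str        # hypothetical message sent to the audio environment


@dataclass
class Event:
    label: str                                          # e.g. a note name in the score
    actions: list[Action] = field(default_factory=list)


def schedule(event: Event, detected_at: float, tempo_bpm: float) -> list[tuple[float, str]]:
    """Return (physical_date_in_seconds, message) pairs for the actions of `event`.

    `detected_at` is when the listener recognized the event (in seconds) and
    `tempo_bpm` is its tempo estimate at that moment; both are assumed inputs.
    The tempo is held fixed here, which is a simplification.
    """
    seconds_per_beat = 60.0 / tempo_bpm
    return sorted(
        (detected_at + a.delay_beats * seconds_per_beat, a.message)
        for a in event.actions
    )


# Example: one recognized note carrying three electronic actions (messages are made up).
note = Event("C4", [Action(0.0, "start reverb"),
                    Action(1.0, "harmonizer on"),
                    Action(2.0, "harmonizer off")])
print(schedule(note, detected_at=10.0, tempo_bpm=60))
print(schedule(note, detected_at=10.0, tempo_bpm=120))
```

With the same score, a detection at t = 10 s with an estimated tempo of 60 BPM places the three actions at 10, 11 and 12 s; at 120 BPM they move to 10, 10.5 and 11 s, so the electronics tighten along with the performer while the score itself is unchanged.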

The current language is highly dynamic and addresses requests from more than 40 artists using the system in their own creative work. Besides its incremental development with users and artists, the language is inspired by synchronous reactive languages such as ESTEREL and by Cyber-Physical Systems.

A brief history of Antescofo

The Antescofo project started in 2007 as a collaboration between a researcher (Arshia Cont) and a composer (Marco Stroppa), with the aim of composing an interactive piece for saxophone and live computer programs in which the system acts as a Cyber Physical Music System. It rapidly became a system coupling a simple action language with machine listening.

The language was subsequently used by other composers, such as Jonathan Harvey, Philippe Manoury and Emmanuel Nunes, and the system has been featured in world-class concerts with ensembles such as the Los Angeles Philharmonic, New York Philharmonic, Berlin Philharmonic, BBC Orchestra and others.

In 2011, two computer scientists (Jean-Louis Giavitto from CNRS and Florent Jacquemard from Inria) joined the team, and intensive development of the language began with the participation of José Echeveste (whose PhD focused on Antescofo's unique synchronization capabilities) and Philippe Cuvillier (whose PhD focused on the use of temporal information in the listening machine). The new MuTant team was formed in early 2012 as a joint venture between Ircam, CNRS, Inria and UPMC in Paris.

In 2017, Arshia Cont, José Echeveste and Philippe Cuvillier created a start-up to develop Metronaut, a tailor-made musical accompaniment app for classical musicians.

The antescofo~ object for Max and PD will remain freely available for artistic and research projects, and it continues to evolve to fix bugs, optimize performance and add new features in response to artists' requests and new creative directions.

Today, Antescofo is widely used in the mixed music pieces produced at IRCAM, and it continues to evolve incrementally in line with user requests, both at IRCAM (Jean-Louis Giavitto) and within the start-up.

A historical example: "Anthèmes 2" by Pierre Boulez.